Abstract

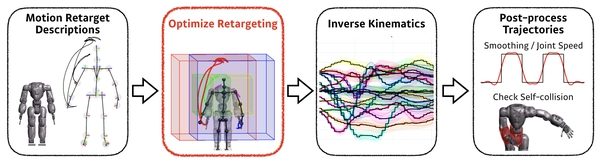

This paper studies how to transfer the upper-body motions of human demonstrators or animation characters to humanoid robots. We present a motion retargeting pipeline built on joints of interest (JOI) defined for both the source skeleton and the target humanoid robot. The pipeline uses an optimization-based motion transfer method that modifies the link lengths of the source skeleton and fine-tunes the JOI motion descriptors in task (Cartesian) space. To evaluate the pipeline, we use two different 3-D motion datasets from three human demonstrators and an Ogre animation character, Bork, and successfully transfer the motions to four different humanoid robots: DARwIn-OP, COmpliant HuMANoid Platform (COMAN), THORMANG, and Atlas. Furthermore, the actual COMAN and THORMANG robots are controlled to show that the proposed method can be deployed on physical robots.
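
To make the idea of link length modification concrete, the following is a minimal sketch, not the paper's implementation. It assumes the source skeleton arm is a serial chain of 3-D joint positions (for example shoulder, elbow, wrist) and rescales each link to a target robot's link length while keeping the link directions. The function name `rescale_chain` and the example lengths are hypothetical; the optimization and the task-space fine-tuning described in the abstract are not shown.

```python
import math


def rescale_chain(joints, target_lengths):
    """Rescale a serial chain of joints to new link lengths.

    joints: list of (x, y, z) positions ordered from root to tip.
    target_lengths: desired length of each link (len(joints) - 1 values).
    Link directions are preserved; only link lengths change.
    (Hypothetical helper for illustration, not taken from the paper.)
    """
    if len(target_lengths) != len(joints) - 1:
        raise ValueError("need exactly one target length per link")

    out = [tuple(float(c) for c in joints[0])]  # root stays in place
    for i in range(1, len(joints)):
        # Direction of the original link from joint i-1 to joint i.
        d = [joints[i][k] - joints[i - 1][k] for k in range(3)]
        norm = math.sqrt(sum(c * c for c in d))
        if norm == 0.0:
            raise ValueError("zero-length source link")
        scale = target_lengths[i - 1] / norm
        # Place the new joint along the same direction at the new length.
        out.append(tuple(out[i - 1][k] + d[k] * scale for k in range(3)))
    return out


# Example: a human arm (shoulder, elbow, wrist) mapped to shorter robot links.
source_arm = [(0.0, 0.0, 0.0), (0.0, 0.0, -0.3), (0.25, 0.0, -0.3)]
robot_arm = rescale_chain(source_arm, [0.1, 0.1])
```

In this sketch the rescaled chain keeps the arm's pose (upper arm pointing down, forearm pointing forward) but with the robot's link lengths, which is the kind of intermediate skeleton a retargeting method could then refine in Cartesian space.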

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author’s copyright. These works may not be reposted without the explicit permission of the copyright holder.