Abstract

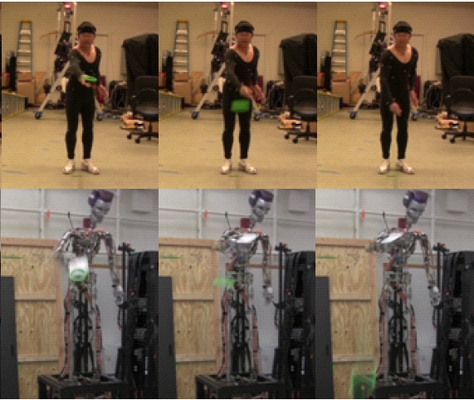

In this paper, we present a humanoid robot that extracts and imitates person-specific differences in motion, which we call style. Synthesizing human-like, stylistic motion variations suited to specific scenarios is becoming important for entertainment robots, and imitating styles is one such variation that makes robots more amiable. Our approach extends the learning from observation (LFO) paradigm, which enables robots to understand what a human is doing and to extract the reusable essences to be learned. We focus on styles within the domain of LFO and on representing them using these reusable essences. We design an abstract model of a target motion defined in LFO, observe human demonstrations through the model, and formulate the representation of styles in the context of LFO. We then introduce a framework for generating robot motions that reflect styles extracted automatically from human demonstrations. To verify the proposed method, we applied it to a ring toss game and generated motions for a physical humanoid robot. The styles of three randomly chosen players were extracted automatically from their demonstrations and used to generate robot motions. The robot imitates each player's style without exceeding its physical constraints while tossing the rings to the goal.
