Abstract

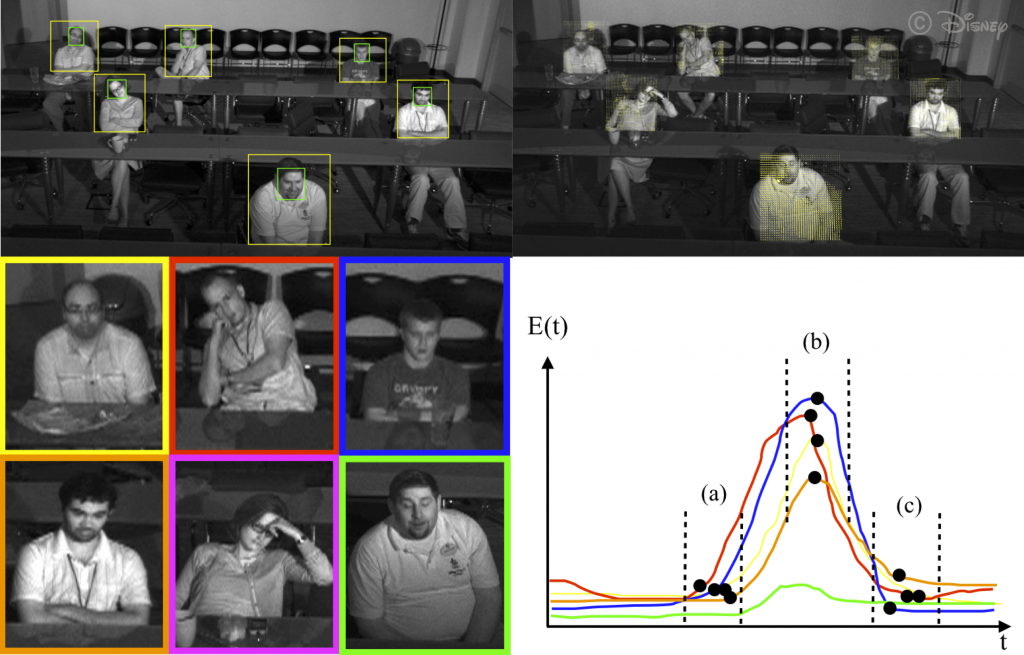

We propose a method for representing audience behavior through facial and body motions captured from a single video stream, and we use these motions to predict the ratings of feature-length movies. This is a very challenging problem because i) the movie viewing environment is dark, and people appear at different scales and viewpoints; ii) feature-length movies are long (80-120 minutes), and tracking people without interruption for that length of time remains an unsolved problem; and iii) the expressions and motions of audience members are subtle, short, and sparse, which makes labeling of activities unreliable. To circumvent these issues, we use an infra-red illuminated test-bed to obtain visually uniform input. We then use motion-history features, which capture the subtle movements of a person within a pre-defined volume, and form a group representation of the audience as a histogram of pair-wise correlations over small time windows. Using this group representation, we learn a movie rating classifier from crowd-sourced ratings collected by rottentomatoes.com and show our prediction capability on audiences from 30 movies across 250 subjects (> 50 hours).
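
As a rough illustration of the group representation described above, the following sketch builds a histogram of pair-wise correlations over short time windows. It is a minimal sketch under stated assumptions, not the authors' implementation: it assumes each audience member has already been reduced to a one-dimensional motion signal (for example, a per-frame motion magnitude), and the function name `pairwise_correlation_histogram`, the use of Pearson correlation, the non-overlapping windows, and the bin count are all hypothetical choices that the abstract does not specify.

```python
import numpy as np


def pairwise_correlation_histogram(signals, window, n_bins=10):
    """Histogram of pair-wise correlations over short time windows.

    signals : array of shape (n_people, n_frames); one motion signal per
              audience member (assumed input, not specified in the abstract).
    window  : window length in frames (hypothetical parameter).
    n_bins  : number of histogram bins spanning [-1, 1] (hypothetical).
    """
    signals = np.asarray(signals, dtype=float)
    n_people, n_frames = signals.shape
    upper = np.triu_indices(n_people, k=1)      # each unordered pair once
    edges = np.linspace(-1.0, 1.0, n_bins + 1)  # correlation range
    hist = np.zeros(n_bins)

    # Non-overlapping windows; a trailing partial window is ignored.
    for start in range(0, n_frames - window + 1, window):
        chunk = signals[:, start:start + window]
        # A person who does not move in this window has zero variance,
        # which yields NaN correlations; those pairs are dropped below.
        with np.errstate(invalid="ignore", divide="ignore"):
            corr = np.corrcoef(chunk)
        values = corr[upper]
        values = values[np.isfinite(values)]
        counts, _ = np.histogram(values, bins=edges)
        hist += counts

    total = hist.sum()
    return hist / total if total > 0 else hist


# Toy example: persons 0 and 1 move together, person 2 moves in opposition.
x = np.array([0.0, 1.0, 2.0, 3.0, 3.0, 1.0, 0.0, 2.0])
toy_signals = np.stack([x, x, -x])
toy_hist = pairwise_correlation_histogram(toy_signals, window=4, n_bins=2)
```

The normalized histogram gives a fixed-length descriptor regardless of audience size, which is what allows a single classifier to be trained across screenings with different numbers of viewers.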

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author’s copyright. These works may not be reposted without the explicit permission of the copyright holder.