Abstract

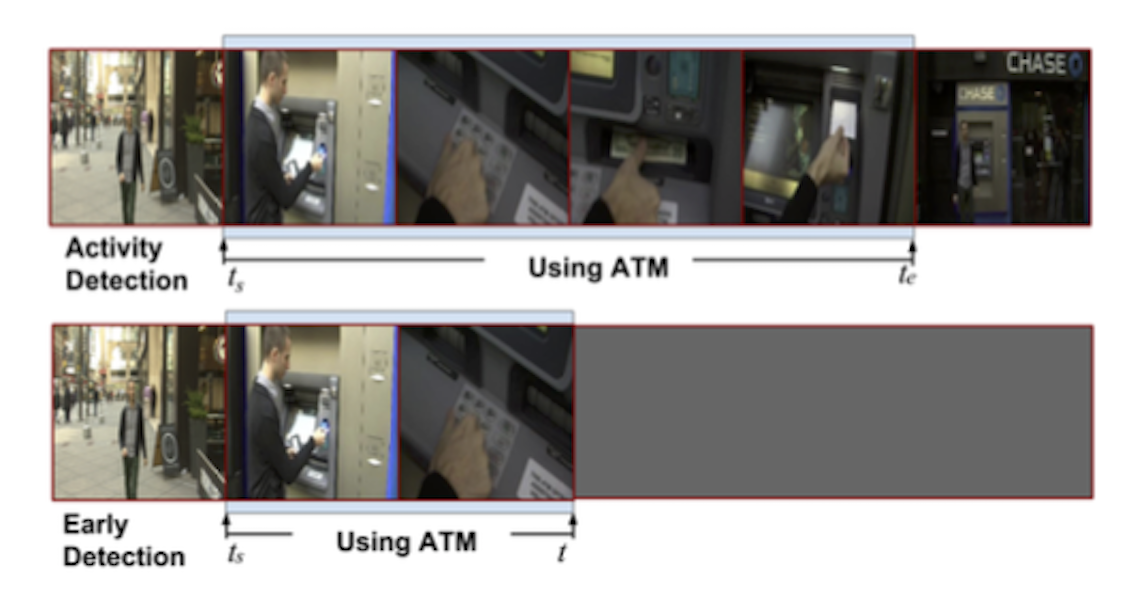

In this work, we improve the training of temporal deep models so that they better learn activity progression for activity detection and early detection tasks. Conventionally, when training a Recurrent Neural Network, specifically a Long Short-Term Memory (LSTM) model, the training loss considers only the classification error. However, we argue that the detection score of the correct activity category, or the detection score margin between the correct and incorrect categories, should be monotonically non-decreasing as the model observes more of the activity. We design novel ranking losses that directly penalize the model for violating this monotonicity, and use them together with the classification loss when training LSTM models. Evaluation on ActivityNet shows significant benefits of the proposed ranking losses in both activity detection and early detection tasks.
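To make the monotonicity idea concrete, the following is a minimal sketch of one way such a penalty could look. It is not the paper's exact formulation, which the abstract does not give. It assumes per-time-step class scores (for example, softmax outputs of an LSTM) stored in a NumPy array, and it penalizes every step where the correct-class score falls below the highest score seen at earlier steps. The function names and the `weight` parameter are hypothetical, chosen only for illustration.

```python
import numpy as np

def monotonicity_ranking_loss(scores, label):
    """Illustrative penalty for drops in the correct-class score.

    scores: array of shape (T, C), per-step class scores (assumed in (0, 1]).
    label:  integer index of the correct activity category.
    """
    p = scores[:, label]
    # Highest correct-class score observed up to each earlier step.
    prev_max = np.maximum.accumulate(p)[:-1]
    # Hinge on the drop: zero whenever the score has not decreased.
    violations = np.maximum(0.0, prev_max - p[1:])
    return float(violations.sum())

def classification_loss(scores, label):
    """Per-step cross-entropy on the correct class, summed over time."""
    return float(-np.log(scores[:, label] + 1e-12).sum())

def total_loss(scores, label, weight=1.0):
    """Classification loss plus the weighted ranking penalty.

    `weight` is a hypothetical trade-off parameter, not taken from the paper.
    """
    return classification_loss(scores, label) + weight * monotonicity_ranking_loss(scores, label)

# Correct-class score rises steadily: no penalty.
rising = np.array([[0.4, 0.6], [0.3, 0.7], [0.1, 0.9]])
# Correct-class score dips after the first step: penalty is incurred.
dipping = np.array([[0.4, 0.6], [0.6, 0.4], [0.5, 0.5]])
```

With these toy inputs, `monotonicity_ranking_loss(rising, 1)` is zero, while the `dipping` sequence is penalized at both later steps because its correct-class score stays below the earlier value of 0.6. A margin-based variant would apply the same hinge to the difference between the correct-class score and the largest incorrect-class score.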
