Abstract

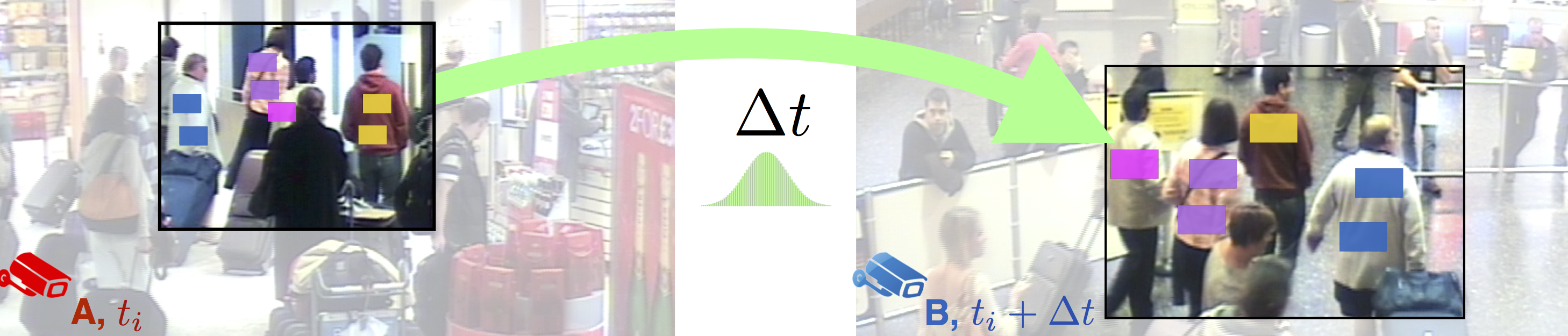

Re-identification methods often require well aligned, unoccluded detections of an entire subject. Such assumptions are impractical in real world scenarios, where people tend to form groups. To circumvent poor detection performance caused by occlusions, we use fixed regions of interest and employ codebook-based visual representations. We account for illumination variations between cameras using a coupled clustering method that learns per-camera codebooks with entries that correspond across cameras. Because predictable movement patterns exist in many scenarios, we also incorporate temporal context to improve re-identification performance. This includes learning expected travel times directly from data and using mutual exclusion constraints to encourage solutions that maintain temporal ordering. Our experiments illustrate the merits of the proposed approach in challenging re-identification scenarios including crowded public spaces.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author’s copyright. These works may not be reposted without the explicit permission of the copyright holder.