Abstract

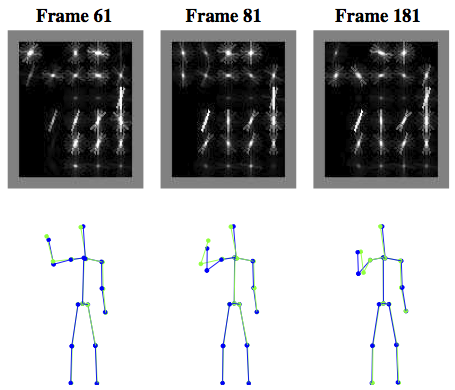

Discriminative regression models have proved effective for many vision applications (here we focus on 3D full-body and head pose estimation from image and depth data). However, dataset bias is common and can significantly degrade the performance of a trained model on target test sets. As we show, covariate shift adaptation, a form of unsupervised domain adaptation (USDA), can be used to address certain biases in this setting but is unable to deal with more severe structural biases in the data. We propose an effective and efficient semi-supervised domain adaptation (SSDA) approach for addressing these more severe biases. The proposed SSDA is a generalization of USDA that can effectively leverage labeled data in the target domain when available. Our method amounts to projecting input features into a higher-dimensional space (by construction well suited for domain adaptation) and estimating weights for the training samples based on the ratio of test and train marginals in that space. The resulting augmented, weighted samples can then be used to learn a model of choice, alleviating the problems of bias in the data; as an example, we introduce the SSDA twin Gaussian process regression (SSDA-TGP) model. With this model we also address the issue of data sharing, where we are able to leverage samples from certain activities (e.g., walking, jogging) to improve predictive performance on very different activities (e.g., boxing). In addition, we analyze the relationship between domain similarity and the effectiveness of the proposed USDA versus SSDA methods. Moreover, we propose a computationally efficient alternative to TGP (Bo and Sminchisescu 2010) and its variants, called the direct TGP. We show that our model outperforms a number of baselines on two public datasets: HumanEva and the ETH Face Pose Range Image Dataset. Using the proposed direct formulation, we also achieve an 8–15 times speedup in computation time over the traditional formulation of TGP, with little to no loss in performance.
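The sample-weighting idea in the abstract (weights given by the ratio of test and train marginals, then a weighted learner) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration, not the method of the paper: a Gaussian kernel density estimate stands in for the density-ratio estimator, weighted ridge regression stands in for TGP, the feature augmentation step is omitted, and all function names, the bandwidth, and the synthetic data are hypothetical.

```python
import numpy as np

def gaussian_kde_density(query, samples, bandwidth):
    """Gaussian kernel density estimate of `samples`, evaluated at `query`."""
    # Pairwise squared Euclidean distances, shape (n_query, n_samples).
    sq_dists = ((query[:, None, :] - samples[None, :, :]) ** 2).sum(axis=2)
    dim = samples.shape[1]
    norm = (2.0 * np.pi * bandwidth ** 2) ** (dim / 2.0)
    return np.exp(-sq_dists / (2.0 * bandwidth ** 2)).mean(axis=1) / norm

def importance_weights(x_train, x_test, bandwidth=0.5, eps=1e-12):
    """Estimate w(x) = p_test(x) / p_train(x) at each training input."""
    p_test = gaussian_kde_density(x_train, x_test, bandwidth)
    p_train = gaussian_kde_density(x_train, x_train, bandwidth)
    w = p_test / (p_train + eps)
    # Rescale so the weights average to one (a convenience, not a requirement).
    return w / w.mean()

def weighted_ridge_fit(x, y, weights, reg=1e-3):
    """Weighted ridge regression with a bias term (stand-in for the model of choice)."""
    xb = np.hstack([x, np.ones((x.shape[0], 1))])
    xw = xb * weights[:, None]
    gram = xb.T @ xw + reg * np.eye(xb.shape[1])
    return np.linalg.solve(gram, xw.T @ y)

def ridge_predict(x, coef):
    """Predict with coefficients returned by `weighted_ridge_fit`."""
    xb = np.hstack([x, np.ones((x.shape[0], 1))])
    return xb @ coef

# Hypothetical synthetic data: training and test inputs follow different marginals.
rng = np.random.default_rng(0)
x_train = rng.normal(0.0, 1.0, size=(200, 1))
x_test = rng.normal(1.5, 0.5, size=(100, 1))
y_train = np.sin(x_train[:, 0]) + 0.1 * rng.normal(size=200)

w = importance_weights(x_train, x_test)
coef = weighted_ridge_fit(x_train, y_train, w)
pred = ridge_predict(x_test, coef)
```

Training samples that fall where test inputs are dense receive larger weights, so the fitted model concentrates on the region that matters at test time. A kernel density ratio of this kind degrades quickly as dimensionality grows, which is one reason a dedicated density-ratio estimator would normally be preferred in practice.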
