Abstract

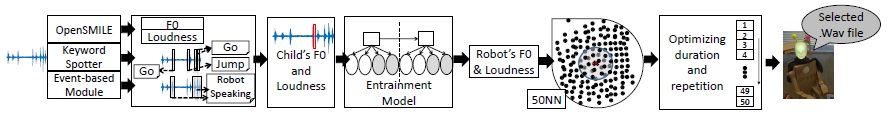

Synchrony is an essential aspect of human-human interactions. In previous work, we have seen how synchrony manifests in low-level acoustic phenomena like fundamental frequency, loudness, and the duration of keywords during the play of child-child pairs in a fast-paced, cooperative, language-based game. The correlation between the increase in such low-level synchrony and the increase in enjoyment of the game suggests that a similar dynamic between child and robot coplayers might also improve the child’s experience. We report an approach to creating on-line acoustic synchrony by using a dynamic Bayesian network learned from prior recordings of child-child play to select from a predefined space of robot speech in response to real-time measurement of the child’s prosodic features. Data were collected from 40 new children, each playing the game with both a synchronizing and a non-synchronizing version of the robot. Results show a significant order effect: although all children grew to enjoy the game more over time, those who began with the synchronous robot maintained their own synchrony to it and achieved higher engagement compared with those who did not.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author’s copyright. These works may not be reposted without the explicit permission of the copyright holder.